Problem definition

We would like to use the existing Open Food Facts database to check whether mandatory nutritional values are enough to estimate the Nutri-Score of food and beverages.

After reading a substantial body of literature on the Nutri-Score, we hypothesized that 6 mandatory nutritional factors are enough to provide a robust estimate of the score, which ranges from A (highest grade) to E (lowest grade). We therefore started building the application linked at the top of this page to show that this approach makes sense and gives good results.

Retrieving our data

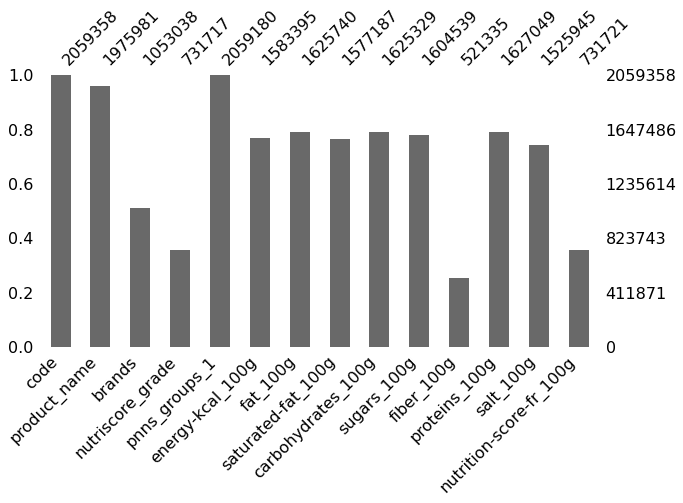

We rely on the open Open Food Facts database as the starting point of our cleaning process. The database is available here. However, as you will quickly notice when working with it, the data has a lot of issues.

The major issues to keep in mind include:

- There is a lot of missing data

- Many entries have absurd values (for example, negative quantities)

- Some entries are simply wrong

- Many columns of the dataset are irrelevant to the problem at hand

The following graph highlights how empty the dataset is.
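One way to quantify this emptiness is to compute the share of missing values per column with pandas. This is a minimal sketch: the helper name `missing_share` is ours, and the file name `en.openfoodfacts.org.products.csv` (a tab-separated export) in the commented usage is an assumption about how the data was downloaded.

```python
import pandas as pd


def missing_share(df: pd.DataFrame) -> pd.Series:
    """Fraction of missing values per column, emptiest columns first."""
    return df.isna().mean().sort_values(ascending=False)


# Assumed usage on the full export (tab-separated and very large):
# products = pd.read_csv("en.openfoodfacts.org.products.csv", sep="\t", low_memory=False)
# missing_share(products).head(20).plot.barh()  # plotting needs matplotlib
```

Sorting from emptiest to fullest makes it easy to see which columns can be dropped outright.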

We address these challenges with the following decisions:

- We start simple but conservative: we only keep rows where the Nutri-Score is already available. That is roughly 350k data points

- For illegal values, we distinguish two cases: negative values are replaced with 0, and values above 100 are replaced with the median

- Not all features are useful for this task, so we only keep the relevant ones
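The three cleaning decisions above can be sketched with pandas. This is a minimal sketch under assumptions: the article does not list its six nutritional factors, so the six `*_100g` nutrient columns and the `nutriscore_grade` column are our guesses based on the Open Food Facts export, and we compute the replacement median over the in-range values of each column.

```python
import pandas as pd

# Hypothetical feature list: six per-100 g nutrient columns (assumed names).
FEATURES = [
    "fat_100g",
    "saturated-fat_100g",
    "carbohydrates_100g",
    "sugars_100g",
    "proteins_100g",
    "salt_100g",
]
TARGET = "nutriscore_grade"  # assumed name of the grade column (a..e)


def clean(df: pd.DataFrame) -> pd.DataFrame:
    # Keep only rows whose Nutri-Score grade is known, and only the
    # columns we need (drops every irrelevant column at once).
    out = df.loc[df[TARGET].notna(), FEATURES + [TARGET]].copy()
    for col in FEATURES:
        values = out[col]
        # A negative quantity is impossible: replace it with 0.
        values = values.mask(values < 0, 0.0)
        # More than 100 g per 100 g is impossible: replace it with the
        # median of the in-range values of the same column.
        median = values[values <= 100].median()
        out[col] = values.mask(values > 100, median)
    return out
```

This sketch only covers the three listed decisions; it does not fill nutrient values that are still missing after filtering.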

At the end of this process, we have a dataset with no errors or missing data.

Running the models

Given the structure of the problem, we tried two approaches:

- Regression: first predict the numerical Nutri-Score, then derive the grade from that score

- Classification: predict the grade directly with a one-vs-rest (OneVsRest) scheme
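The two approaches differ only in where the letter grade comes from. A minimal scikit-learn sketch, assuming the 2017 Nutri-Score cut-offs for general solid foods (A up to -1, B from 0 to 2, C from 3 to 10, D from 11 to 18, E from 19); beverages and some food groups use different thresholds, and `LinearRegression` and `LogisticRegression` are placeholders for whichever base models are actually used.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression
from sklearn.multiclass import OneVsRestClassifier

GRADES = np.array(list("ABCDE"))
# Assumed 2017 cut-offs for general foods: a score below 0 is A,
# [0, 3) is B, [3, 11) is C, [11, 19) is D, and 19 or more is E.
CUTS = [0, 3, 11, 19]


def score_to_grade(scores):
    """Approach 1 (regression): map predicted numerical scores to letters."""
    return GRADES[np.digitize(scores, CUTS)]


# Approach 1: regress the numerical score, then call score_to_grade.
regressor = LinearRegression()

# Approach 2 (classification): one binary classifier per grade.
classifier = OneVsRestClassifier(LogisticRegression(max_iter=1000))
```

This illustrates the borderline-product issue: with the regression route, predicted scores of 2.9 and 3.1 land in grades B and C respectively, so a small regression error near a cut-off changes the letter.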

Our results show that classification seems to be more robust for borderline products, that is, products whose score is close to the boundary between two grades. For that reason, we favor classification.

Among the available models, we chose to try Logistic Regression, Random Forest, XGBoost and neural networks. We use precision and recall as our decision metrics. Note that accuracy can be misleading for this dataset because the classes are imbalanced.
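A toy example shows why accuracy can mislead under class imbalance: a model that always predicts the majority grade still scores well on accuracy, while macro-averaged recall exposes it. The labels are made up for illustration, and macro averaging is our assumption, since the article does not say how per-class precision and recall were aggregated.

```python
from sklearn.metrics import accuracy_score, precision_score, recall_score

# Made-up, imbalanced labels: 8 products graded A, 2 graded E.
y_true = ["A"] * 8 + ["E"] * 2
# A degenerate model that always predicts the majority grade.
y_pred = ["A"] * 10

accuracy = accuracy_score(y_true, y_pred)
# Macro averaging gives every grade the same weight, whatever its size.
precision = precision_score(y_true, y_pred, average="macro", zero_division=0)
recall = recall_score(y_true, y_pred, average="macro", zero_division=0)
print(f"accuracy={accuracy:.2f} precision={precision:.2f} recall={recall:.2f}")
# prints "accuracy=0.80 precision=0.40 recall=0.50"
```

The model never finds a single E product, yet its accuracy is 80%; macro recall drops to 50% because grade E counts as much as grade A.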

Our analysis shows that Random Forest and XGBoost are the best-performing models, both reaching 90% precision and recall. Given the similar results, we favor the one with the shorter training time, which is Random Forest.
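A comparison that weighs training time alongside precision and recall could look like the following sketch. It rests on assumptions: hyperparameters are illustrative defaults rather than the production ones, XGBoost is only included if the optional `xgboost` package is installed, labels are assumed to be integer-encoded (0 for A to 4 for E) because `XGBClassifier` expects that, and the helper names `candidate_models` and `compare` are ours.

```python
import time

from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import precision_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier


def candidate_models():
    # The four model families named in the article (illustrative settings).
    models = {
        "logistic_regression": LogisticRegression(max_iter=1000),
        "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
        "neural_net": MLPClassifier(max_iter=500, random_state=0),
    }
    try:
        from xgboost import XGBClassifier  # optional dependency

        models["xgboost"] = XGBClassifier(n_estimators=100)
    except ImportError:
        pass
    return models


def compare(models, X, y, seed=0):
    # Stratified hold-out split keeps the grade proportions in both parts.
    X_tr, X_te, y_tr, y_te = train_test_split(
        X, y, test_size=0.25, stratify=y, random_state=seed
    )
    results = {}
    for name, model in models.items():
        start = time.perf_counter()
        model.fit(X_tr, y_tr)
        train_seconds = time.perf_counter() - start
        pred = model.predict(X_te)
        results[name] = {
            "precision": precision_score(y_te, pred, average="macro", zero_division=0),
            "recall": recall_score(y_te, pred, average="macro", zero_division=0),
            "train_seconds": train_seconds,
        }
    return results
```

Calling `compare(candidate_models(), X, y)` returns, per model, macro precision, macro recall and wall-clock training time, which is enough to apply the tie-break rule on training time.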

Production & Next steps

This model is now in production in the Hugging Face application linked at the top of this page. Note that this project is still a work in progress, with many things left to do. I also know this article is a bit short on graphs (although I love them!), but I will add more as soon as I can.