Problem definition

We would like to leverage the Stanford Dogs dataset to build a dog breed classification algorithm. The user submits a picture to the app, and the app returns the predicted breed.

We tackle this problem with two approaches:

- Building our own CNN from scratch

- Using transfer learning with VGG-16, Xception or ResNet50

In this article, we make heavy use of TensorFlow and GPU acceleration, so we recommend using Google Colab for this exercise.
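Before training, it is worth checking that the Colab runtime actually exposes a GPU (Runtime > Change runtime type). A small check, assuming TensorFlow 2.x:

```python
import tensorflow as tf

def available_gpus():
    """Return the GPUs TensorFlow can see (an empty list on a CPU-only runtime)."""
    return tf.config.list_physical_devices("GPU")

gpus = available_gpus()
print(f"{len(gpus)} GPU(s) visible to TensorFlow")
```

If the list is empty, training still runs, but on the CPU and much more slowly.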

Retrieving our data

We rely on the open Stanford Dogs dataset from Stanford, available here. Note that we only use its annotations and Images folders.
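To work with the Images folder, we first need a list of (picture, breed) pairs. A minimal sketch using only the standard library, assuming the usual Stanford Dogs layout of one sub-folder per breed named `<wordnet_id>-<breed_name>` (e.g. `n02085620-Chihuahua`) containing `.jpg` files; the function name is hypothetical:

```python
from pathlib import Path

def index_stanford_dogs(images_dir):
    """Return (image_path, breed) pairs from a Stanford Dogs-style Images/ folder.

    Assumes one sub-folder per breed named '<wordnet_id>-<breed_name>'.
    """
    samples = []
    for class_dir in sorted(Path(images_dir).iterdir()):
        if not class_dir.is_dir():
            continue  # skip stray files such as README or archives
        # Split on the first '-' only: some breed names contain hyphens
        # (e.g. 'black-and-tan_coonhound').
        breed = class_dir.name.split("-", 1)[-1]
        for image_path in sorted(class_dir.glob("*.jpg")):
            samples.append((image_path, breed))
    return samples
```

Calling `index_stanford_dogs("Images")` then gives a flat list that is easy to count, filter or split into training and validation sets.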

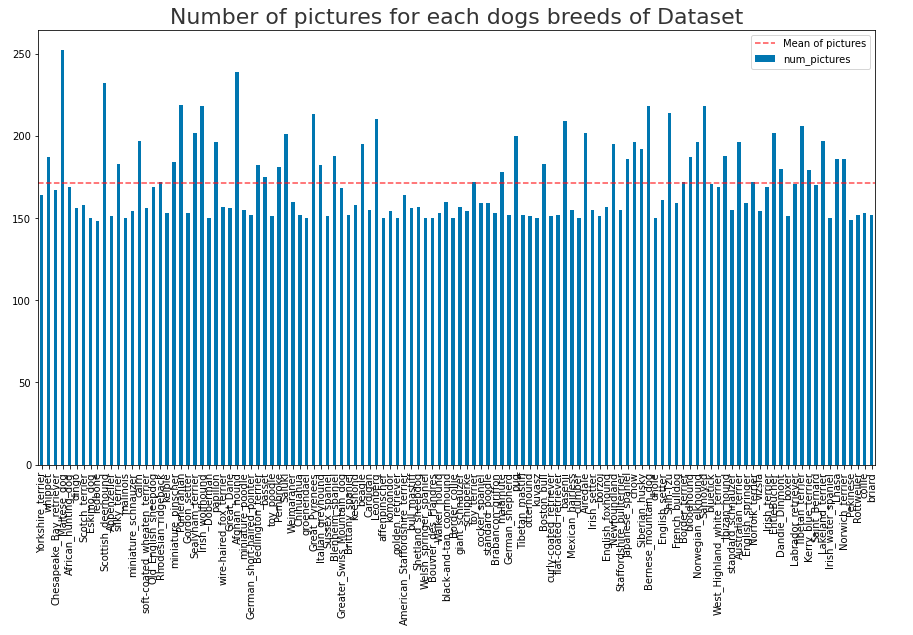

We highlight the number of pictures and classes with the following graph:

We have 120 classes (dog breeds) in total, and all classes seem quite balanced in terms of frequency in the dataset. Since no single breed dominates, accuracy is not inflated by a majority class, which makes it a relevant metric for this dataset.
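The balance claim can also be checked numerically by comparing the largest and smallest class counts. A minimal sketch, assuming `labels` holds one breed name per image; the function name and the returned keys are hypothetical:

```python
from collections import Counter

def class_balance(labels):
    """Summarize how balanced a list of class labels is."""
    counts = Counter(labels)
    smallest = min(counts.values())
    largest = max(counts.values())
    return {
        "num_classes": len(counts),
        "min_count": smallest,
        "max_count": largest,
        # Close to 1 means balanced classes; large values signal imbalance.
        "imbalance_ratio": largest / smallest,
    }
```

If the ratio turned out to be large, a metric such as macro-averaged F1 would be a safer choice than plain accuracy.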

Preparing the data

Image processing usually requires heavy preprocessing, especially when images are heterogeneous in size and quality. We apply the following processing steps to the data:

- Resizing

- Histograms

- Non-local means filter

- Data augmentation

- Reducing the number of classes
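The article does not detail how the number of classes is reduced. One simple option, shown here purely as an assumption, is to keep the N most frequent breeds, which shortens experiments while the pipeline is being tuned. The function name is hypothetical and `samples` is a list of (image_path, label) pairs:

```python
from collections import Counter

def keep_top_classes(samples, num_classes):
    """Keep only samples whose label is among the `num_classes` most frequent labels.

    Ties in frequency are broken alphabetically so the selection is deterministic.
    """
    counts = Counter(label for _, label in samples)
    ranked = sorted(counts, key=lambda label: (-counts[label], label))
    kept = set(ranked[:num_classes])
    return [(path, label) for path, label in samples if label in kept]
```

Since the classes are roughly balanced, picking a random subset of breeds would work about as well; what matters is keeping the same subset for training, validation and testing.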

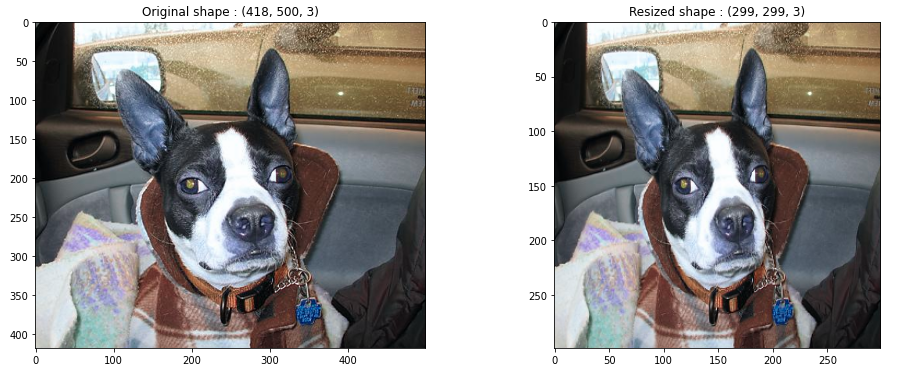

Picture resizing

All pictures must have identical dimensions, so we resize them to 299x299, which is also the default input size of Xception.

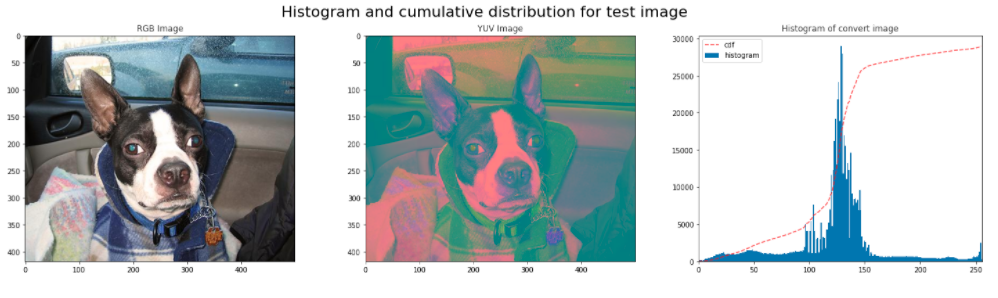

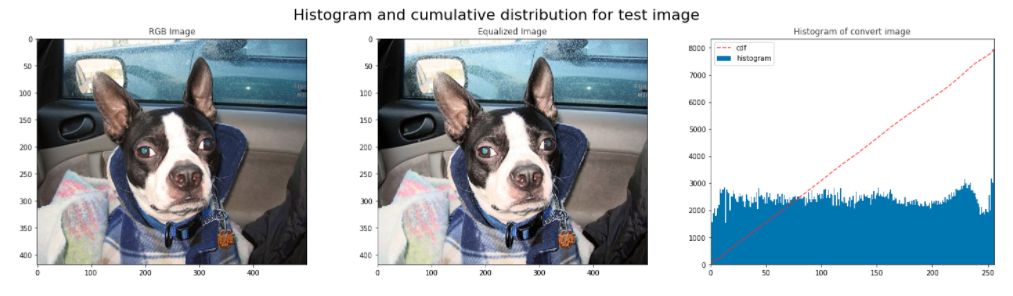

Histograms

We convert our RGB test picture to the YUV color space and look at the histogram of its Y channel (luma, i.e. the grayscale brightness) to spot any lighting imbalance.
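A NumPy-only sketch of that check: compute the Y channel with the ITU-R BT.601 weights (the ones OpenCV uses for the Y channel of `cv2.COLOR_RGB2YUV`) and count pixels per brightness level. The function name is hypothetical:

```python
import numpy as np

def luma_histogram(rgb_image: np.ndarray, bins: int = 256):
    """Return the Y (luma) channel of an RGB uint8 image and its histogram."""
    rgb = rgb_image.astype(np.float64)
    # BT.601 luma: a weighted sum of R, G and B.
    y = 0.299 * rgb[..., 0] + 0.587 * rgb[..., 1] + 0.114 * rgb[..., 2]
    y = np.clip(np.rint(y), 0, 255).astype(np.uint8)
    counts, _ = np.histogram(y, bins=bins, range=(0, 256))
    return y, counts
```

A histogram bunched toward the low end points to an underexposed picture, and one bunched toward the high end to an overexposed one.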

Equalization

The previous chart makes it quite obvious that our test picture has a lighting imbalance, which we rebalance through histogram equalization.

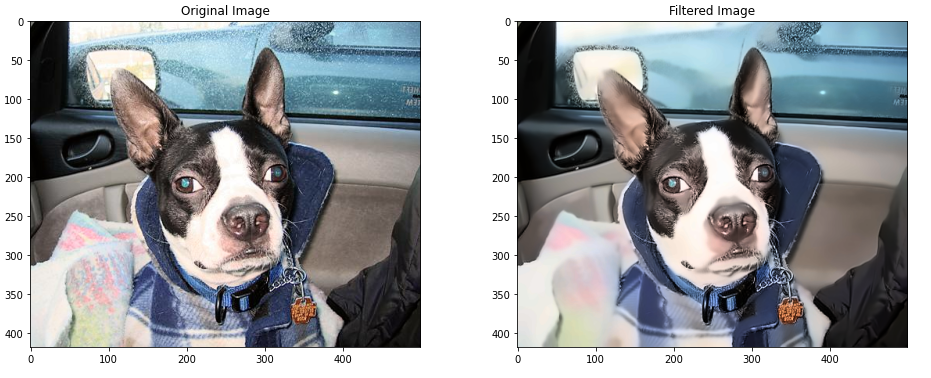

Non-local means filtering

We help our models by denoising the images with this filter. It smooths the image, which may speed up training a bit and improve its quality.

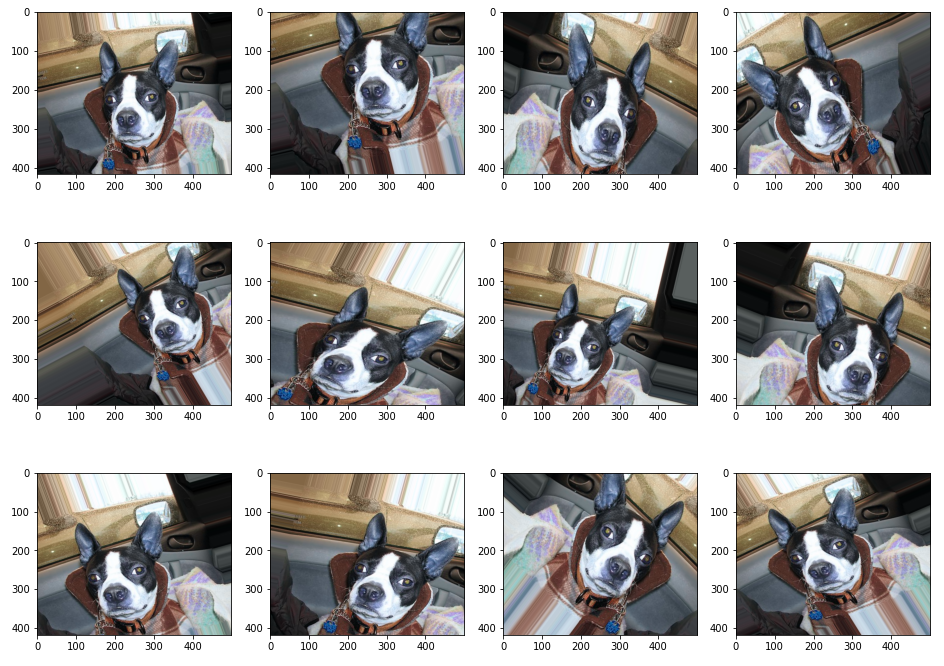

Data Augmentation

We generate artificial data by augmenting our images with Keras's ImageDataGenerator (rotation, zoom, etc.). Please refer to the GitHub repository for more details about the code.
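A minimal sketch of such a generator. The ranges below are illustrative assumptions, not the values used in the repository. ImageDataGenerator is now a legacy Keras API (newer code tends to use preprocessing layers such as `RandomRotation`), but it still works:

```python
import tensorflow as tf

def make_augmenter(validation_split: float = 0.2):
    """Build an ImageDataGenerator applying random geometric augmentations."""
    return tf.keras.preprocessing.image.ImageDataGenerator(
        rotation_range=20,        # random rotation, in degrees
        zoom_range=0.2,           # random zoom factor in [0.8, 1.2]
        width_shift_range=0.1,    # horizontal shift, as a fraction of width
        height_shift_range=0.1,   # vertical shift, as a fraction of height
        horizontal_flip=True,     # a mirrored dog is still the same breed
        validation_split=validation_split,
    )

# Typical use on a folder with one sub-directory per breed (path is hypothetical):
# train_gen = make_augmenter().flow_from_directory(
#     "Images", target_size=(299, 299), batch_size=32,
#     class_mode="categorical", subset="training")
```

Because the validation subset drawn from the same generator is also augmented, a second generator without augmentation is often used for validation.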

Running the models

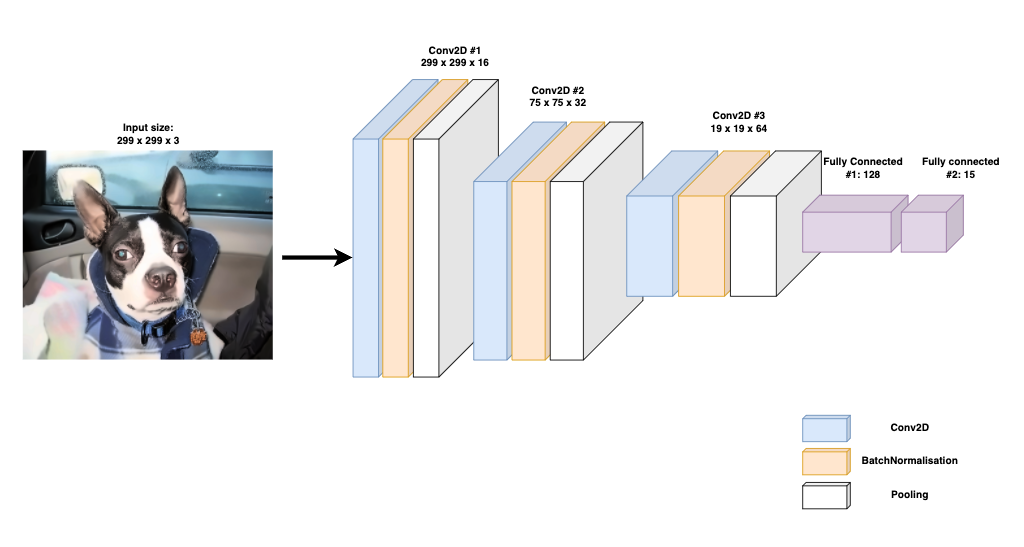

Building our own CNN

We combined ideas from VGG-16 and ResNet to build our own CNN. The image below shows its structure:

We train the model on a GPU and tune its hyperparameters with Keras Tuner; in particular, we tune the learning rate and the beta_1 parameter (the first-moment decay rate) of the Adam optimizer. For further details, see the repository.

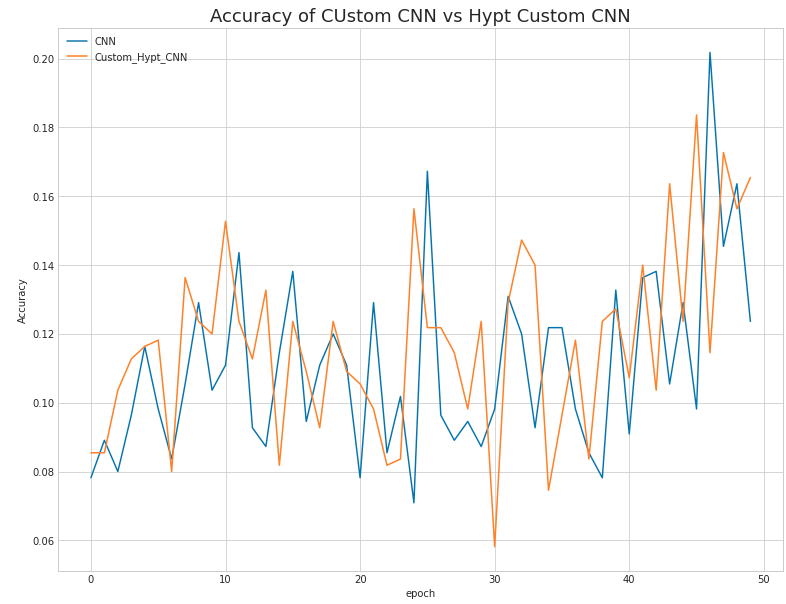

In the chart below, we show the validation accuracy of our base CNN and our tuned CNN. The results are somewhat disappointing, so let's turn to transfer learning.

Transfer Learning

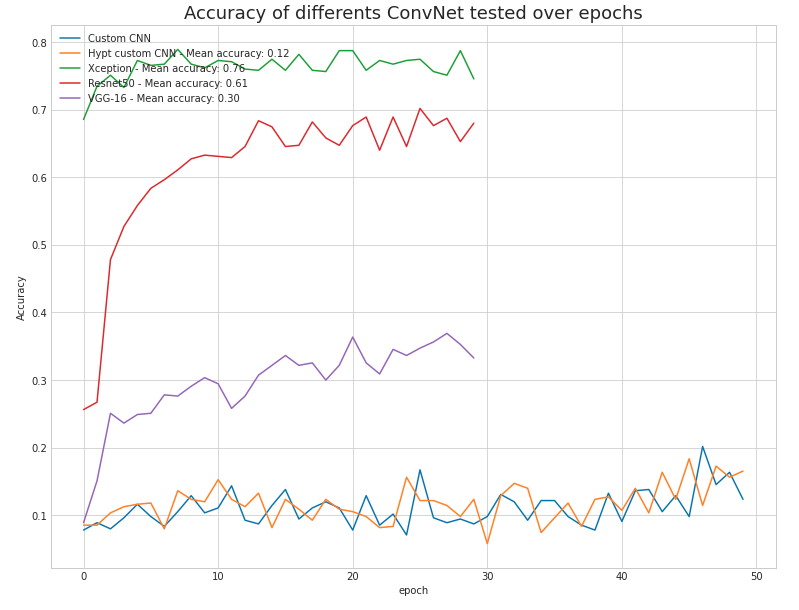

We use VGG-16, Xception, and ResNet50 with weights pre-trained on the ImageNet database, which is straightforward with TensorFlow. We then retrain part of the weights of the best-performing model. We show the validation accuracy of the different models in the chart below:

We notice that:

- The pre-trained models perform much better than our custom CNN

- Xception is the best-performing model

- We can train these models for a limited number of epochs
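The frozen-backbone setup used for this comparison can be sketched as follows, assuming `tf.keras.applications` (which ships ImageNet weights for all three models) and the 299x299 RGB pictures prepared earlier. Each backbone gets its own `preprocess_input`; the dropout rate and the single dense head are illustrative choices:

```python
import tensorflow as tf

apps = tf.keras.applications
# Backbone constructor and its matching preprocessing function.
BACKBONES = {
    "vgg16": (apps.VGG16, apps.vgg16.preprocess_input),
    "resnet50": (apps.ResNet50, apps.resnet50.preprocess_input),
    "xception": (apps.Xception, apps.xception.preprocess_input),
}

def build_transfer_model(name, num_classes=120, weights="imagenet", size=299):
    """Frozen pre-trained backbone + new softmax head for dog breeds."""
    backbone_cls, preprocess = BACKBONES[name]
    base = backbone_cls(include_top=False, weights=weights,
                        input_shape=(size, size, 3), pooling="avg")
    base.trainable = False  # only the new head is trained at first

    inputs = tf.keras.Input(shape=(size, size, 3))  # RGB pixel values in [0, 255]
    x = preprocess(inputs)                 # model-specific pixel scaling
    x = base(x, training=False)            # keep BatchNorm layers in inference mode
    x = tf.keras.layers.Dropout(0.2)(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    model = tf.keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

With the backbone frozen, each epoch only updates the small head, which is one reason a few epochs are enough to compare the models.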

Therefore, we select Xception as our main model and retrain the weights of its exit flow (the highest-level layers) to try to gain more performance. The results are mixed, as this additional tuning does not add much performance. For more details, please refer to the GitHub repository.
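In Keras's Xception implementation, the exit-flow layers have names starting with `block13` and `block14`. A minimal sketch of unfreezing only those layers on a Xception base; the function name is hypothetical, and the exit flow's 1x1 shortcut convolution, which has an auto-generated name, stays frozen here:

```python
import tensorflow as tf

EXIT_FLOW_PREFIXES = ("block13", "block14")

def unfreeze_exit_flow(xception_base: tf.keras.Model) -> tf.keras.Model:
    """Make only Xception's exit-flow layers trainable; everything else stays frozen."""
    xception_base.trainable = True  # must be True for per-layer flags to take effect
    for layer in xception_base.layers:
        layer.trainable = layer.name.startswith(EXIT_FLOW_PREFIXES)
    return xception_base

# After training the head with a frozen base (hypothetical names):
# unfreeze_exit_flow(base)
# model.compile(optimizer=tf.keras.optimizers.Adam(1e-5),  # small LR protects pre-trained weights
#               loss="categorical_crossentropy", metrics=["accuracy"])
# model.fit(train_gen, validation_data=val_gen, epochs=5)
```

Keras only picks up changes to `trainable` after the model is compiled again, hence the recompile step.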

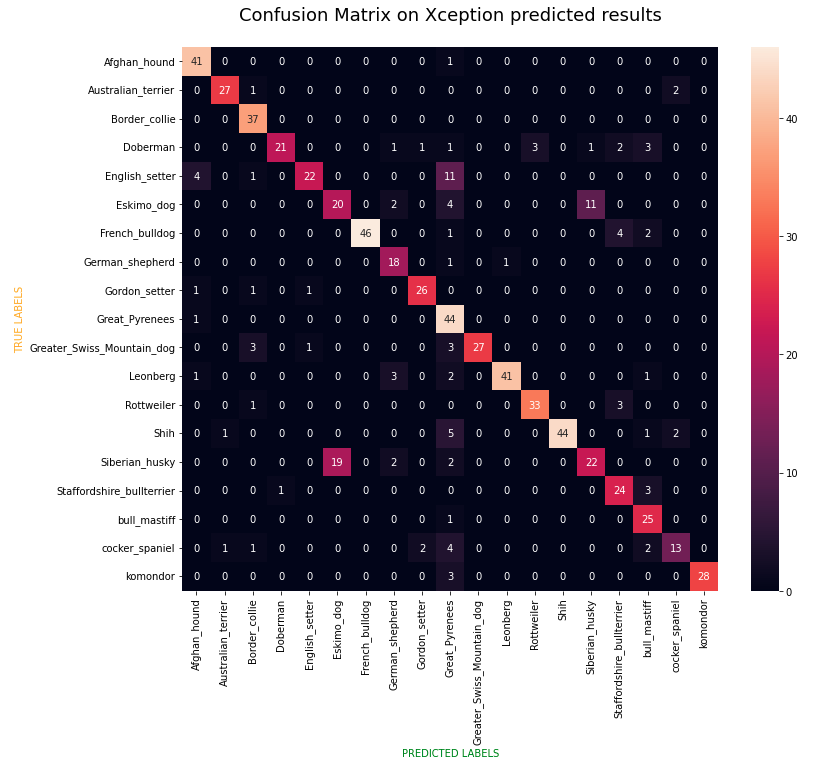

Testing Xception on our test set

The chart below shows the results as a confusion matrix. Very few classes have classification errors. Some errors concentrate on a couple of class pairs because the two breeds look alike, particularly in color. Otherwise, the results are quite impressive!
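To name the breed pairs behind those errors rather than reading them off the chart, one can list the largest off-diagonal cells of the confusion matrix. A NumPy-only sketch, assuming `y_true` and `y_pred` are integer class indices (e.g. the argmax of the model's predictions); `sklearn.metrics.confusion_matrix` builds the same matrix, with rows as true classes and columns as predictions:

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Rows = true class, columns = predicted class."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm

def most_confused_pairs(cm, class_names, top=5):
    """Return up to `top` (true, predicted, count) off-diagonal cells, largest first."""
    off_diag = cm.copy()
    np.fill_diagonal(off_diag, 0)  # ignore correct predictions
    pairs = []
    for idx in np.argsort(off_diag, axis=None)[::-1][:top]:
        i, j = np.unravel_index(idx, cm.shape)
        if off_diag[i, j] == 0:
            break  # no more errors to report
        pairs.append((class_names[i], class_names[j], int(off_diag[i, j])))
    return pairs
```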